Jaynil Patel

projects

Projects related to Web Applications:

1) HTML Form to Google Drive and Google Sheets

Technologies : HTML, JavaScript, Google Apps Script

Submit data from a customized HTML form to Google Drive and Google Sheets. The text data from

the HTML form will be processed and stored in Google Sheets while files (objects) will be

uploaded to Google Drive. This provides an easy way to store information in Sheets and Drive

using your own HTML page and without using Google Forms.

view github

view github

2) Patient Record System For Personal Clinic

Technologies : Flask, MongoDB, JavaScript ES6, SocketIO

Created a full stack Python web application for maintaining Electronic Medical Records (EMR). I collaborated with

multiple clinical doctors for requirement analysis by understanding their business objectives.

Implemented Python scripts for migrating 100k+ legacy database entries from AccessDB to MongoDB.

Integrated AWS S3 service in Python Flask for managing medical certificates, case invoices and income reports.

This is one of the biggest project I have worked on.

Private Repo

Private Repo

3) Anti-Smokify: Automated Cigarette Detection In Videos

Technologies : Python, Keras, Flask, MongoDB, Docker

Implemented an automated cigarette smoking scene detection applicaion for films to put relevant disclaimer

(“Smoking is Injurious to Health”) within the video. Key tasks include:

Collaborated in design and development of Python web application for video processing and scene extraction.

Built RESTful APIs using Flask and AWS DynamoDB; revamped UI using HTML5, JavaScript ES6, and Bootstrap.

Enhanced CI/CD utilizing Docker compose, reducing build and deployment times by 40%.

Implemented transfer learning by leveraging Keras and analysed the model’s performance metrics.

view github

view github

4) Birla Vishvakarma Mahavidyalaya Website - (My undergraduate college)

Technologies : ASP.NET, CSS, JavaScript

Completely redesigned the existing website of my undergraduate institute under the guidance of a professor.

Made it clean, modern and responsive. Extended the admin panel functionality using CK Editor 4 for ASP.NET

Publishing soon

Publishing soon

5) Know-How '19: Annual College Event (Undergraduate)

Technologies : HTML, CSS, JavaScript, GitHub

Developed website for annual college event for professional developement of students.

The website served as a platform for promoting the event and publishing results of various competitions for the

event.

Projects related to Data Science:

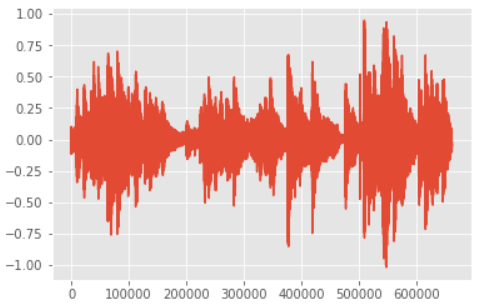

1) audio classifier using deep learning

central language : pythonlibraries/frameworks : tensorflow

An audio classifier that is capable of classifying an audio as music or speech. The dataset consists of 128 tracks, each

30 seconds long. Each class (music/speech) has 64 examples. The tracks are all 22050Hz Mono 16-bit

audio files in .wav format.

The raw audio is processed using discrete Fourier transform. This will return complex coordinates (real and imaginary) which will be then converted into cartesian coordinates indicating magnitude and phase. Finally the resultant values will be converted to a pseudo decibel scale. All these values will be plotted on a Frequency vs Time graph.

A sliding window is created that will snap the Xs and ys (one-hot encoding) of the graph every 250 ms and will store it in a list as inputs to the network. All the collected inputs and labels will then be fed to the model (4 layer CNN + 2 fully connected layer).

This method can be further extended to classify music based on different genres.

Dataset : gtzan-music-speech

view notebook

The raw audio is processed using discrete Fourier transform. This will return complex coordinates (real and imaginary) which will be then converted into cartesian coordinates indicating magnitude and phase. Finally the resultant values will be converted to a pseudo decibel scale. All these values will be plotted on a Frequency vs Time graph.

A sliding window is created that will snap the Xs and ys (one-hot encoding) of the graph every 250 ms and will store it in a list as inputs to the network. All the collected inputs and labels will then be fed to the model (4 layer CNN + 2 fully connected layer).

This method can be further extended to classify music based on different genres.

Dataset : gtzan-music-speech

view notebook

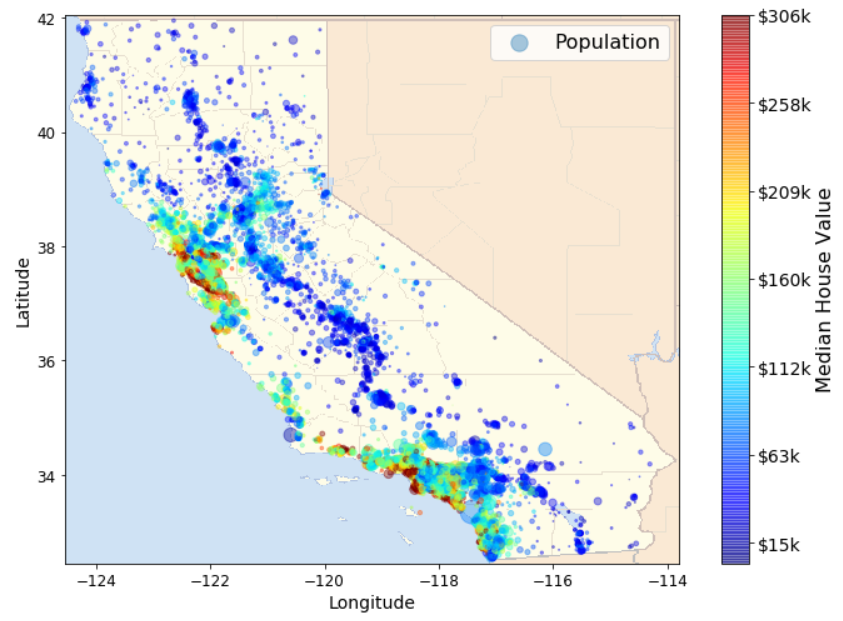

2) an in-depth analysis for prediction of california's housing prices

central language : pythonlibraries/frameworks : scikit-learn, matplotlib

The goal here is to build a machine learning model to predict housing prices in California using the California Census Data.

The data has metrics such as population, median income, median housing prices, and so on. The data

was mostly raw and lacked any form of preprocessing. Hence a lot of time was invested in processing

and cleaning the data, thus getting it ready for a mathematical model to gain insights. The data

was tested with linear-regression, decision-tree-regressor and random-forest-regressor.

view notebook

view notebook

3) machine learning + titanic

central language : pythonlibraries/frameworks : scikit-learn

The challenge here is to complete the analysis of what sorts of people were likely to survive the most infamous shipwrecks

in history. In particular, the goal is to apply the tools of machine learning to predict which passengers

survived the tragedy, i.e to predict if a passenger survived the sinking of the Titanic or not.

view notebook

view notebook

4) implementing batch normalization in tensorflow

central language : pythonlibraries/frameworks : tensorflow

Training Deep Neural Networks is complicated by the fact that the distribution of each layer's inputs changes during training,

as the parameters of the previous layers change. This slows down the training by requiring lower

learning rates and careful parameter initialization, and makes it notoriously hard to train models

with saturating nonlinearities. Batch Normalization is used to address this kind of problems known

as vanishing/exploding gradients problems. The technique consists of adding operations such as zero

centering, normalizing the inputs, scaling and shifting the results. These sequence of operations

are added just before the activation function of each layer of our deep neural network.

According to the original paper by Sergey Ioffe, Google Inc and Christian Szegedy, Google Inc (arXiv:1502.03167 [cs.LG]), Batch Normalization achieves the same accuracy with 14 times fewer training steps, and beats the original model by a significant margin. Using an ensemble of batch-normalized networks, they improve upon the best published result on ImageNet classification: reaching 4.9% top-5 validation error (and 4.8% test error), exceeding the accuracy of human raters.

Here I will be showing you how you can implement batch normalization on the MNIST dataset using tensorflow.

view notebook

According to the original paper by Sergey Ioffe, Google Inc and Christian Szegedy, Google Inc (arXiv:1502.03167 [cs.LG]), Batch Normalization achieves the same accuracy with 14 times fewer training steps, and beats the original model by a significant margin. Using an ensemble of batch-normalized networks, they improve upon the best published result on ImageNet classification: reaching 4.9% top-5 validation error (and 4.8% test error), exceeding the accuracy of human raters.

Here I will be showing you how you can implement batch normalization on the MNIST dataset using tensorflow.

view notebook

5) dog and cat classifier using cnn

central language : pythonlibraries/frameworks : tflearn, opencv

No introduction is needed for this problem, because first, the problem statement is pretty much intuitive, and second, this

is an infamous dogs vs cats classification problem which you would have definitely come acrossed,

if you are into deep learning. The deep learning model which i have used is convolutional neural

network (cnn) since it performs well when dealing with image data. The dataset is available on

kaggle. The resource consists of 25,000 labeled images for training the model and 12,500 images

for testing the model.

view notebook

view notebook

6) mnist - a detailed study of m.l. classification

central language : pythonlibraries/frameworks : scikit-learn

The MNIST dataset is a set of 70,000 small images of digits handwritten by high school students and employees of the US Census

Bureau.Each image is labeled with the digit it represents. It is a perfect database for people who

want to try learning techniques and pattern recognition methods on real-world data while spending

minimal efforts on preprocessing and formatting. Whenever people come up with a new classification

algorithm, they are curious to see how it will perform on MNIST. Whenever someone learns Machine

Learning, sooner or later they tackle MNIST.

view notebook

view notebook

Made using

and